Introduction

Have you noticed that your content isn't getting the attention it used to, even though it's ranking well?

As AI-powered search tools like ChatGPT, Perplexity, and Google’s AI Overviews (launched as the Search Generative Experience, or SGE) take over, content that isn’t optimized for Large Language Models (LLMs) may get overlooked.

In 2026, if you're not optimizing for LLMs, your content could be invisible to the users searching for it.

Old-school SEO strategies, such as using keywords and building backlinks, are no longer enough. Now it’s all about LLM SEO: creating content that is structured and relevant, so AI systems can easily understand and share it.

If your content isn’t built for these systems, it could be ignored in favor of quick, AI-generated answers.

With 15% of search results now featuring AI-driven overviews that provide direct answers, many B2B brands are seeing a drop in traffic and clicks. If you’re facing the same issue, it might be because your content isn’t optimized for LLMs.

At Revv Growth, we help B2B brands optimize their content for LLMs, so you don’t miss out on valuable AI-driven search traffic. Let us help you stay ahead in this new era of search.

To stay competitive in this new scenario, it’s crucial to understand what LLM search optimization is and how it differs from traditional SEO. Let’s dive into the details of what makes content LLM-friendly and why it matters.

What Is LLM Search Optimization?

LLM (Large Language Model) Search Optimization is an advanced approach to optimizing content so that it performs well with AI systems, specifically large language models like ChatGPT, Google’s AI (SGE), Claude, and other generative AI tools.

Unlike traditional SEO, which has typically been centered on keyword optimization, backlinks, and page authority, LLM optimization focuses on enhancing how AI interprets and generates answers based on the content it processes.

Traditional SEO focuses on ensuring that search engines understand and rank your content based on relevant keywords, page speed, user experience, and quality backlinks. While these factors still play a role in LLM optimization, the focus shifts toward semantics: how the content is structured and the meaning behind the words, rather than just their placement.

LLMs are not simply looking for keywords but are trained to understand context, entities, and the relationships between them to generate coherent and relevant answers.

How LLMs Read and Generate Answers

LLMs process information differently from traditional search engines. Instead of relying on keyword matching, they focus on meaning, context, and relationships between words. Here are the key factors:

- Semantic Structure:

LLMs interpret content based on the relationships between words and concepts. Well-organized content with clear headings and logical flow is easier for AI to process.

- Entity Mapping:

LLMs excel at identifying and connecting entities (e.g., names, places, concepts). For instance, if content mentions "Tesla" and "electric cars," the model understands Tesla as an electric car company.

- Factual Reliability:

AI search systems tend to favor content they can corroborate. Well-researched, fact-checked content is more likely to be interpreted correctly and reused in answers.

Examples of Where LLM Optimization Applies

LLM optimization is crucial in AI-driven platforms that generate answers directly from content:

- ChatGPT Answers: Optimized content helps ChatGPT generate more accurate and contextually relevant responses.

- Google AI Overviews: Google’s AI-driven overviews feature optimized content, providing instant answers in search results.

- Claude: Similar to ChatGPT, Claude benefits from structured, accurate content to deliver precise responses.

- Perplexity Snippets: Optimized content with clear structure and semantic depth has a higher chance of being featured as a snippet in Perplexity.

In each of these examples, LLM optimization helps content be selected by AI systems, providing clear, accurate, and contextually relevant answers in response to user queries.

To leverage LLMs in search optimization, it’s key to apply strategies that make your content more accessible and relevant to AI systems. Let’s explore the principles and techniques to optimize your content for LLMs.

8 Core Techniques to Optimize for LLMs in 2026

Optimizing for LLMs in 2026 requires specific strategies that align with how these AI systems process and present content.

Here are eight key techniques that will help ensure your content is recognized, understood, and effectively ranked by AI tools like ChatGPT, Google SGE, and others.

1. Use Structured Content with Clear Hierarchy

LLMs thrive on content that is logically organized and easy to parse. This involves using a clear hierarchy to break down complex information.

- H1-H4 Usage: Structure your content with a clear flow, starting with H1 for your main topic and H2-H4 for subsections. This hierarchy helps both readers and LLMs navigate your content.

- Short Paragraphs: Keep paragraphs concise and focused. AI systems often pull information from snippets, and long, dense paragraphs are harder for both humans and LLMs to digest.

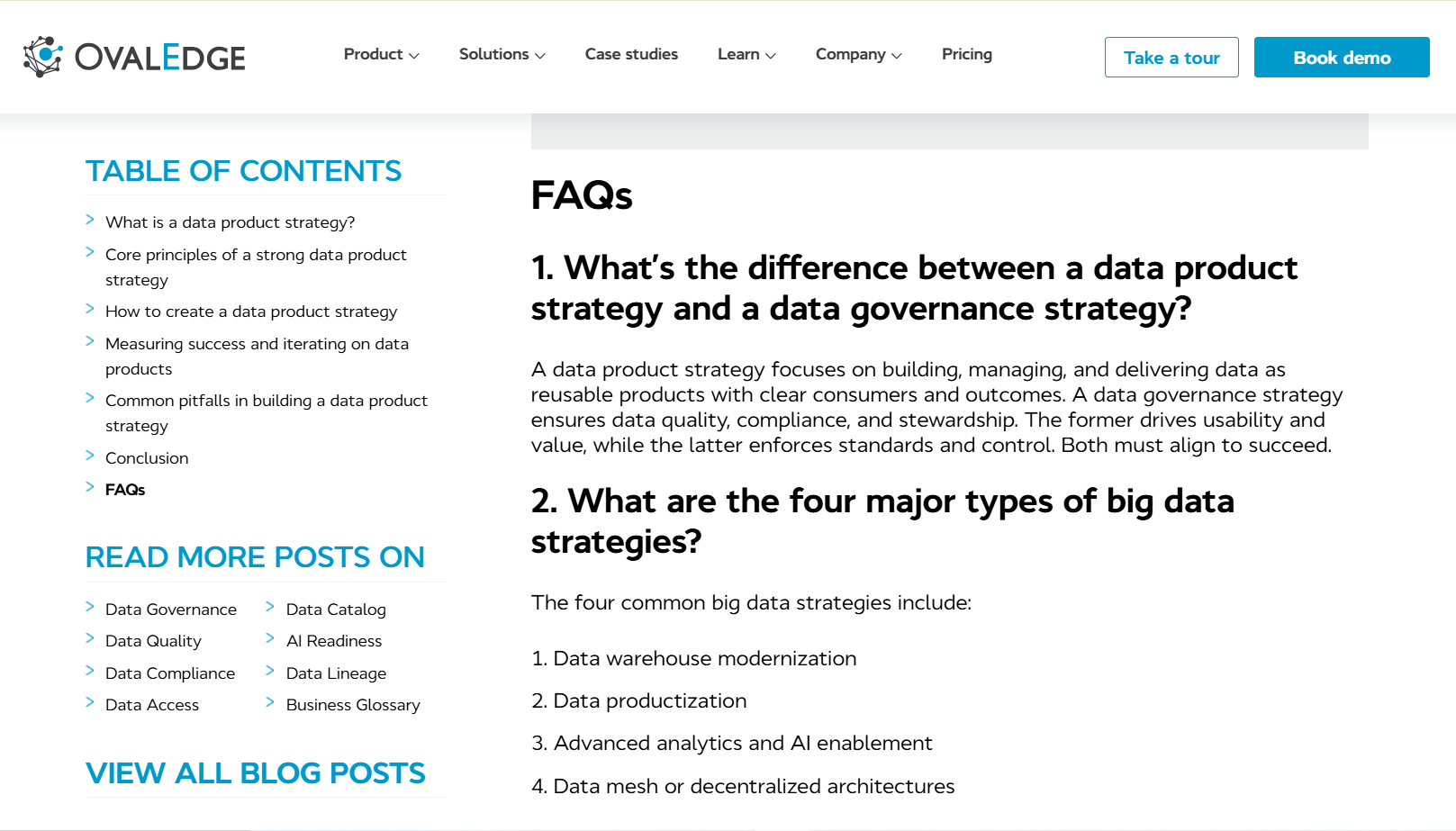

- Definition Blocks and FAQs: Integrate clear definitions and FAQ sections where appropriate. These formats align well with the question-and-answer formats LLMs favor.

For instance, we include FAQs at the end of every blog post for our client Ovaledge. This helps clarify common questions that may not be addressed in the main content, improving both user experience and content discoverability by LLMs.
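A quick way to keep yourself honest on hierarchy and paragraph length is to audit a draft before publishing. The sketch below is a minimal illustration, not a standard tool: it assumes the draft is Markdown with `#`-style headings separated from body text by blank lines, and the function name `audit_structure` and the 80-word paragraph limit are assumptions chosen for this example.

```python
import re

MAX_WORDS = 80  # assumed threshold for a "short" paragraph; tune to taste


def audit_structure(markdown_text, max_words=MAX_WORDS):
    """Return a list of warnings about heading order and paragraph length."""
    warnings = []
    previous_level = 0
    h1_count = 0

    # Blocks are separated by one or more blank lines.
    for block in re.split(r"\n\s*\n", markdown_text.strip()):
        block = block.strip()
        heading = re.match(r"^(#{1,6})\s+(.*)$", block)
        if heading:
            level = len(heading.group(1))
            title = heading.group(2)
            if level == 1:
                h1_count += 1
            # A jump such as H2 -> H4 skips a level in the outline.
            if level > previous_level + 1:
                warnings.append(f"Skipped level before H{level}: {title}")
            previous_level = level
        else:
            words = len(block.split())
            if words > max_words:
                warnings.append(f"Long paragraph ({words} words): {block[:40]}...")

    if h1_count != 1:
        warnings.append(f"Expected exactly one H1, found {h1_count}")
    return warnings


draft = "# LLM SEO Guide\n\nShort intro.\n\n### Skipped a level\n\nAnother short paragraph."
for warning in audit_structure(draft):
    print(warning)  # prints: Skipped level before H3: Skipped a level
```

Running a check like this on every draft catches outline gaps and dense paragraphs early, before an editor has to.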

2. Leverage Semantic SEO & Topic Clustering

LLMs prioritize context over keywords. To optimize for this, your content needs to reflect a deep understanding of a topic, not just surface-level information.

- Depth and Breadth of a Topic: LLMs appreciate comprehensive content that not only covers the main points but also addresses related subtopics and variations.

For instance, if writing about "content marketing strategies," explore subtopics like SEO, social media marketing, email marketing, and content creation tools to show the full scope of the topic.

- Related Queries and LSI Terms: Include related queries and semantically related terms throughout your content. SEO tools often label these "LSI" (Latent Semantic Indexing) terms; in practice, they are simply words and phrases closely associated with the main topic.

For example, for a blog post about "AI in healthcare," LSI terms might include "machine learning in healthcare," "AI diagnostic tools," and "AI healthcare applications."

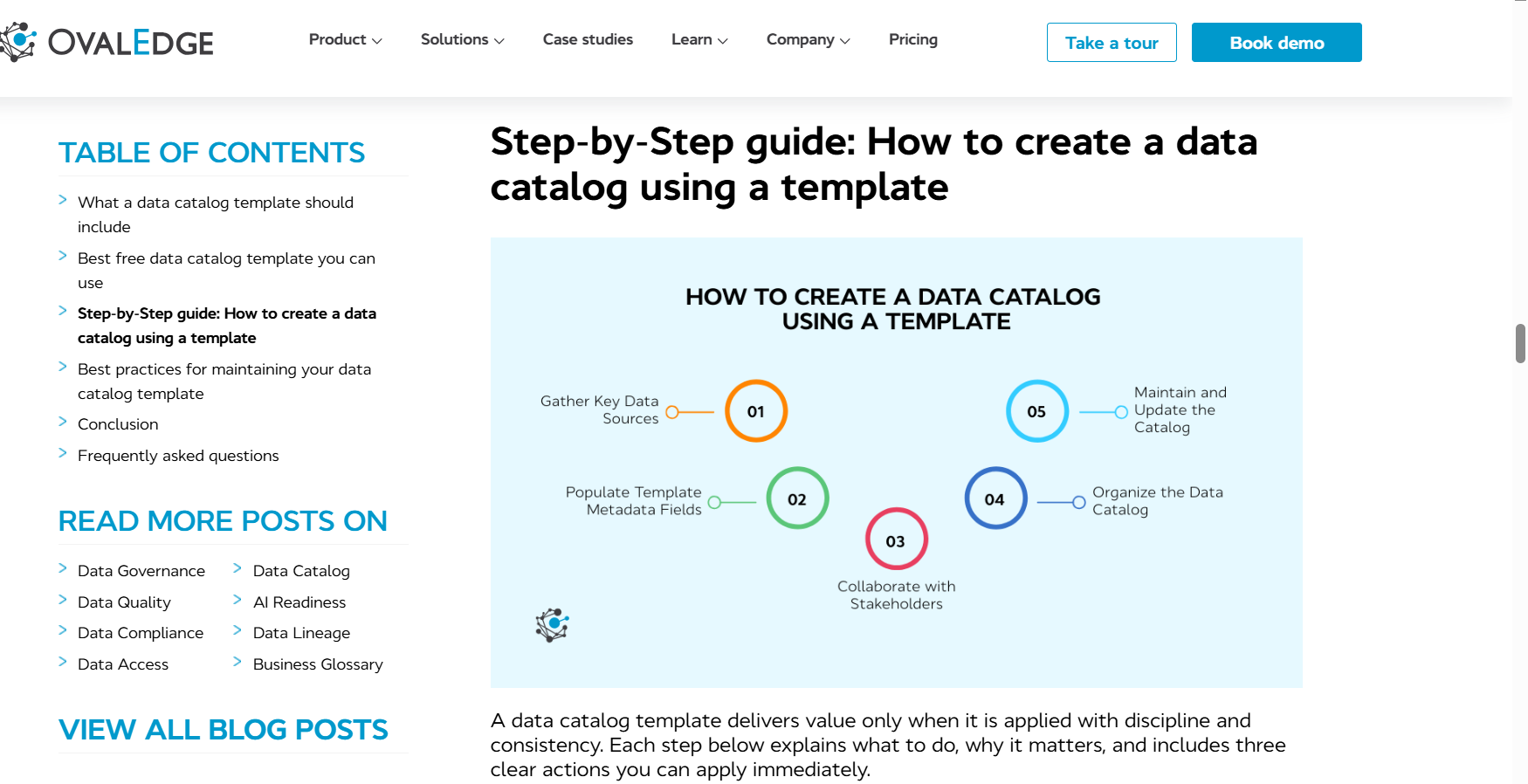

3. Add Schema Markup & Metadata for Clarity

Schema markup enhances how machines interpret your content. By providing extra metadata about your content, schema makes it easier for LLMs to pull information from your site.

- FAQ, HowTo, Article, and Product Schema: These types of structured data allow machines to understand the content and context more effectively.

In our blog on "Data Catalog Templates" for Ovaledge, we included a "How to Create a Data Catalog Template" section, explaining the process in detail. This not only improved readability but also made the content easier for search engines to interpret.

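And if your article discusses “how to train a machine learning model,” HowTo schema labels each step explicitly, which can make the process easier for search engines and AI systems to extract when users search for specific steps. Google has retired its dedicated HowTo rich result, so treat the markup as a machine-readability aid rather than a guaranteed rich snippet.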

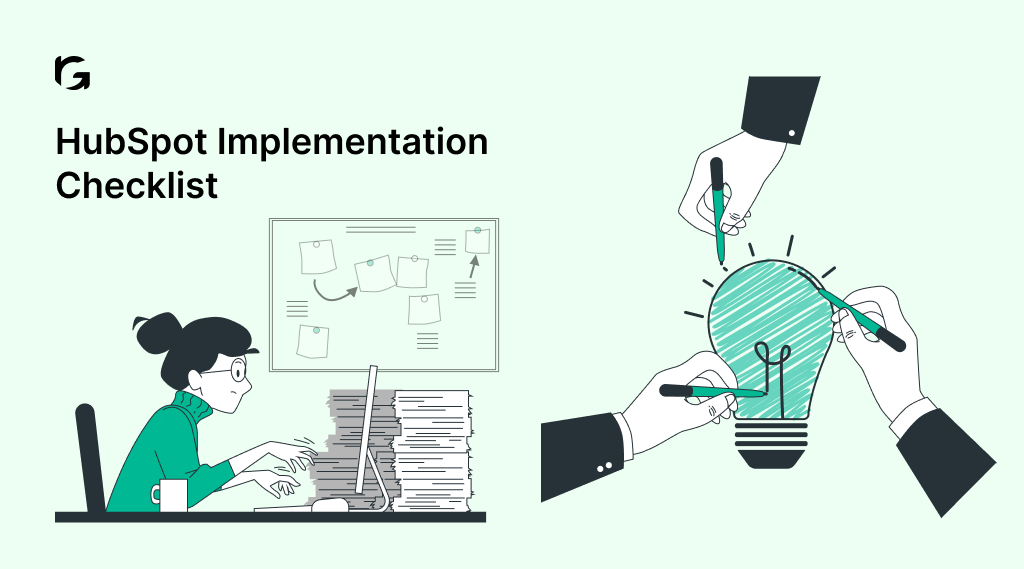
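To make the HowTo idea concrete, here is a minimal sketch that builds schema.org HowTo markup as JSON-LD. The helper name `build_howto_jsonld` and the three step descriptions are placeholders invented for this example; only the schema.org types and properties (`HowTo`, `HowToStep`, `step`, `name`, `text`, `position`) come from the vocabulary itself.

```python
import json


def build_howto_jsonld(name, steps):
    """Build a schema.org HowTo object from a title and ordered (name, text) steps."""
    return {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "step": [
            {"@type": "HowToStep", "position": i, "name": step_name, "text": text}
            for i, (step_name, text) in enumerate(steps, start=1)
        ],
    }


# Placeholder steps for illustration only.
howto = build_howto_jsonld(
    "How to train a machine learning model",
    [
        ("Prepare the data", "Collect, clean, and split the data into training and test sets."),
        ("Train the model", "Fit the model on the training set."),
        ("Evaluate the results", "Measure performance on the held-out test set."),
    ],
)

# The JSON-LD is embedded in the page inside a script tag.
print('<script type="application/ld+json">')
print(json.dumps(howto, indent=2))
print("</script>")
```

Generating the markup from the same step list that drives the visible article keeps the two from drifting apart.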

4. Optimize for Featured Snippets and AI Overviews

Featured snippets and AI overviews provide direct answers in search results, and LLMs prioritize content that aligns with these formats.

- Direct-Answer Formats: To improve the odds that your content is picked up for a featured snippet or AI overview, lead with a clear, concise direct answer.

For example, if you're answering a question like "What is content marketing?", a simple, direct response would be:

Content marketing is a strategy focused on creating and distributing valuable, relevant content to attract and engage a target audience.

- Numbered Lists and Bolded Key Phrases: These help LLMs identify the main points quickly.

For example, in a list of “Top 5 Content Marketing Strategies,” a numbered list with bolded headings will make it easier for AI systems to extract key points.

5. Entity Optimization & Internal Linking

Entities are central to how LLMs understand and connect information. Optimizing content with clear entity mapping ensures AI can connect the dots.

- Entity Mapping: Ensure your content highlights key entities, whether they are people, places, or products.

For example, if your content is about AI in healthcare, include entities like "AI diagnostics," "machine learning," "healthcare providers," and "data analytics tools" to help LLMs build a web of interconnected information.

- Internal Linking: By linking to related pages within your site, you provide a roadmap for LLMs to understand the relationships between terms.

For example, an article on “AI in Healthcare” might link to an internal page about “The Role of AI in Diagnostics” or “AI and Patient Data Security,” helping AI models understand the content's context.

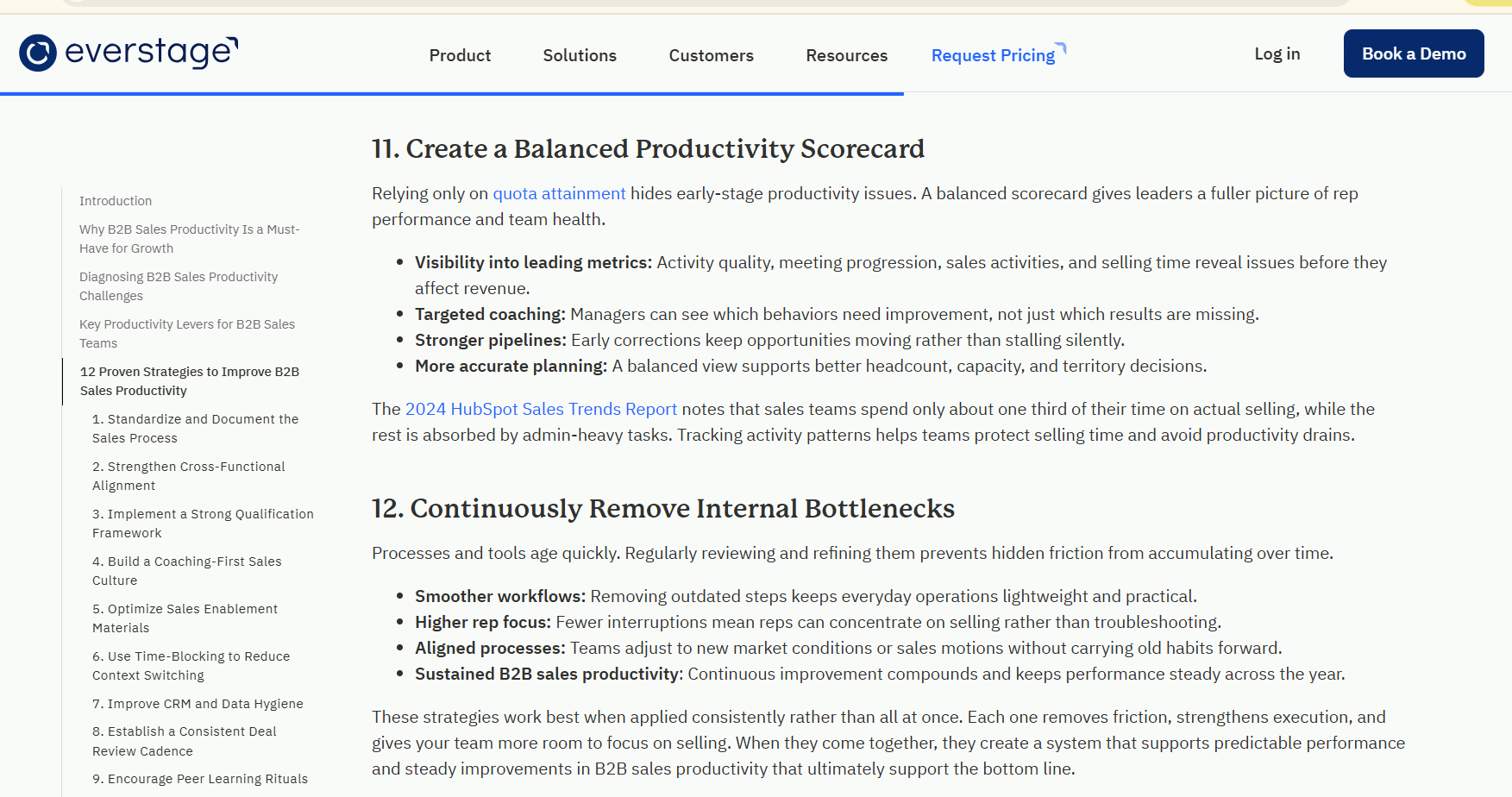
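Internal linking is easier to manage when you treat the site as a graph. The sketch below is a simple illustration under stated assumptions: the page paths are hypothetical, the link map is typed in by hand (in practice it might come from a crawl export), and `find_orphans` is a name made up for this example. It lists pages that no other page links to, which are the ones a crawler or retrieval system is least likely to connect to the rest of the cluster.

```python
def find_orphans(links):
    """links maps each page to the internal pages it links to.

    Returns the pages that no other page links to, sorted alphabetically.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)

    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(pages - linked_to)


# Hypothetical site structure for illustration.
site_links = {
    "/ai-in-healthcare": ["/ai-diagnostics", "/ai-patient-data-security"],
    "/ai-diagnostics": ["/ai-in-healthcare"],
    "/ai-patient-data-security": [],
    "/ai-billing-automation": ["/ai-in-healthcare"],
}

print(find_orphans(site_links))  # ['/ai-billing-automation']
```

Here the billing page links out to the hub but nothing links back to it, so adding a link from the hub article would pull it into the cluster.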

6. Source Citations and Factual Anchors

LLMs prioritize content backed by credible, verifiable sources. Linking to authoritative sources adds credibility to your content and helps AI determine its reliability.

- Credible Sources: LLMs favor content that cites reliable, factual data.

For example, in our blog on "Why B2B Sales Productivity is a Must-Have for Growth" for our client Everstage, we referenced a HubSpot report to add value and credibility.

- Factual Anchors: Anchoring claims with verifiable facts helps LLMs recognize that your content is grounded in reality.

For instance, if you state that "AI can predict disease outbreaks," back it up with a citation to a recent study from a trusted medical organization.
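One way to catch unanchored claims before publishing is a rough scan for statistics that appear without a source. The sketch below is a heuristic, not a fact-checker: it only looks for percentages, it treats a URL, a Markdown link, or the phrase "according to" as evidence of a citation, and the sample text is made up for the demonstration.

```python
import re

STAT_PATTERN = re.compile(r"\d+(?:\.\d+)?\s?%")  # e.g. "15%" or "2.5 %"
SOURCE_PATTERN = re.compile(r"https?://|\]\(|according to", re.IGNORECASE)


def uncited_stats(text):
    """Return sentences that contain a percentage but no visible source."""
    # Naive sentence split: break after ., !, or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [
        sentence
        for sentence in sentences
        if STAT_PATTERN.search(sentence) and not SOURCE_PATTERN.search(sentence)
    ]


# Made-up sample text for illustration.
sample = (
    "Widget adoption grew 40% last year. "
    "According to the 2024 Example Survey (https://example.com/report), "
    "62% of teams use widgets. "
    "Most teams plan to expand usage."
)

for sentence in uncited_stats(sample):
    print("Needs a source:", sentence)
```

The scan only flags candidates; a human editor still has to decide whether a flagged sentence needs a citation or a rewrite.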

7. Include Zero-Click Friendly Formats

Zero-click content provides users with immediate answers without having to click through to a website. Optimizing your content for this format helps boost its chances of being featured in search results.

- Answer Boxes, Definitions, Pros/Cons, Comparison Tables, Stats: These formats cater to users looking for quick, bite-sized information.

For instance, if you’re writing about “Top AI Tools for 2025,” a comparison table that outlines the features of each tool can help ensure your content is featured as a zero-click result.

8. Fine-Tune Content for Retrieval in Chatbots

As AI-driven chatbots become more prevalent, optimizing your content to align with their conversational style is crucial.

- Q&A Patterns: Write your content in a way that anticipates common questions and provides clear, concise answers.

For example, if you're writing about “AI in Marketing,” structure content with frequent Q&A patterns like:

What is AI in marketing?

AI in marketing refers to using artificial intelligence technologies to analyze data, automate tasks, and optimize marketing strategies.

- Natural Phrasing: Since chatbots are designed to understand natural language, ensure your content is written in a conversational, natural tone. Avoid overly technical or jargon-heavy language unless necessary.
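Q&A pairs like the one above can also be exposed to machines as FAQPage structured data. The following is a minimal sketch that reuses two question-and-answer pairs from this article; the helper name `build_faq_jsonld` is an assumption for the example, while `FAQPage`, `Question`, `Answer`, `mainEntity`, and `acceptedAnswer` are schema.org terms.

```python
import json


def build_faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


faq = build_faq_jsonld(
    [
        (
            "What is AI in marketing?",
            "AI in marketing refers to using artificial intelligence technologies "
            "to analyze data, automate tasks, and optimize marketing strategies.",
        ),
        (
            "What is content marketing?",
            "Content marketing is a strategy focused on creating and distributing "
            "valuable, relevant content to attract and engage a target audience.",
        ),
    ]
)

print(json.dumps(faq, indent=2))
```

Keeping the visible FAQ text and the markup generated from one list of pairs means the answers a chatbot reads always match what visitors see.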

To put these optimization techniques into practice, several tools can help streamline the process and provide the insights needed to refine your content for LLMs. Let’s explore some of the top tools available for optimizing content for LLM search.

Tools to Help You Optimize for LLM Search

Successfully optimizing for LLMs requires a mix of content analysis, semantic optimization, and performance tracking. Below are some key tools to help you get there:

1. Surfer SEO, Clearscope, Frase, Jasper for NLP Optimization

- Surfer SEO

Known for its ability to analyze SERPs and generate optimized content strategies, Surfer SEO also includes NLP (Natural Language Processing) features to help you align content with semantic relevance and keyword intent.

Surfer’s audit and content editor tools can optimize your writing to meet LLM requirements by ensuring your content is semantically rich and structured for easy AI interpretation.

- Clearscope

This tool excels at content optimization by analyzing top-ranking pages for a given keyword and suggesting semantically related terms to cover.

Clearscope helps ensure that your content is comprehensive, using semantic SEO techniques that LLMs favor, enabling better visibility in AI-driven results.

- Frase

Frase uses AI to assist with content creation and optimization. Its NLP-powered tools help generate content based on real search intent and LLM preferences.

With Frase, you can easily identify key entities, related topics, and semantic variations that will improve content relevance for AI.

- Jasper

A powerful AI writing assistant, Jasper uses NLP to create content that aligns with semantic search intent. It’s particularly useful for generating content that fits LLM optimization standards by focusing on clarity, context, and relevance.

2. Schema Generators (Merkle, TechnicalSEO.com Tools)

- Merkle Schema Generator

Merkle Schema Generator helps generate structured data markup like FAQ, HowTo, and Article schemas that make your content more understandable to LLMs and search engines.

Using schema markup effectively can ensure that AI systems correctly interpret your content and pull accurate information for overviews and snippets.

- TechnicalSEO.com Schema Generator

TechnicalSEO.com Schema Generator is designed to help with the implementation of schema across your content. It generates clear and easy-to-understand schema markups, improving the ability of LLMs to interpret and display your content in rich snippets or AI-driven overviews.

3. Platforms Tracking SGE/AI Performance: AlsoAsked, Content Harmony, SparkToro

- AlsoAsked

AlsoAsked maps the "People Also Ask" questions related to your target keywords, helping you optimize content for long-tail searches and related questions that LLMs might pull in.

By understanding how related queries are presented to users, you can structure your content to target more relevant, high-traffic opportunities.

- Content Harmony

Content Harmony provides content research and optimization tools that assist with an AI-focused content strategy.

Its ability to help identify content gaps and analyze competitor strategies ensures your content aligns well with the search intent, driving AI responses.

- SparkToro

While SparkToro is known for audience research, it also provides valuable insights into how search engines and AI tools interpret and rank content.

By tracking the performance of content across various AI platforms like Google’s SGE, SparkToro helps identify trends and optimize for visibility in AI-driven searches.

These tools provide the necessary resources to help you create and track LLM-optimized content, ensuring your material is structured, semantically rich, and ready for the future of search.

With the right tools and techniques, several brands have successfully optimized their content for LLM search, resulting in impressive rankings and visibility. Let’s take a closer look at how some of these brands are winning with LLM SEO.

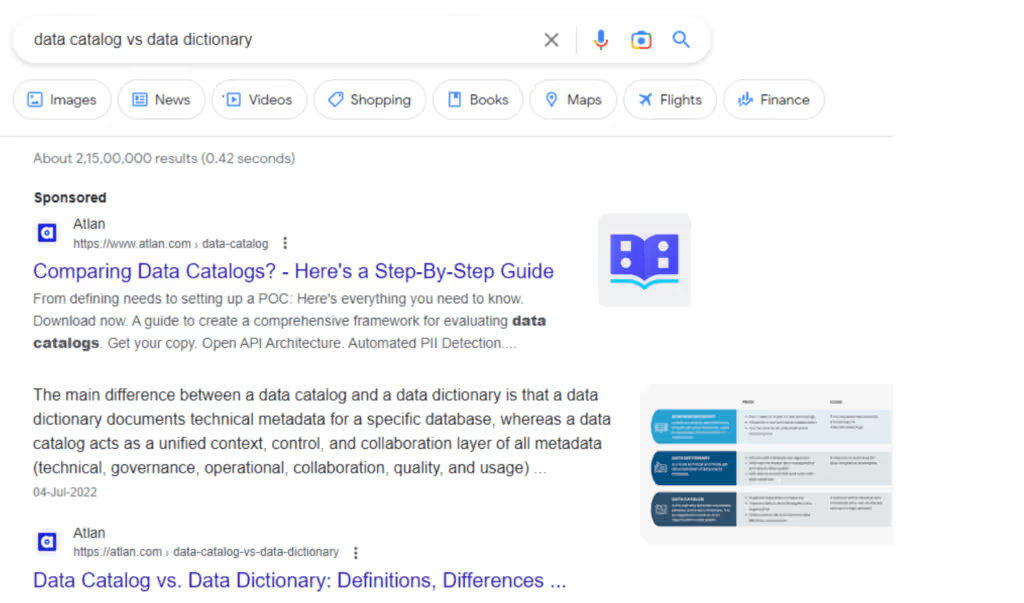

Case Studies: Brands Winning with LLM SEO

One standout example of LLM optimization is Atlan, a company that has harnessed AI to drive significant success in search engine rankings.

Atlan’s Success with RevvGrowth: AI-First Editorial Engine

To show how LLM optimization plays out in the real world, it helps to look at brands that have shifted from traditional SEO to AI‑first strategies and are seeing tangible results in visibility, traffic, and business impact.

One of the most compelling examples of LLM search optimization in action comes from Atlan, a data collaboration platform that partnered with Revv Growth to transform its content strategy and win featured snippets and AI‑generated placements across platforms.


Atlan faced a common challenge for SaaS companies: strong organic rankings that still weren’t translating into meaningful visibility within AI‑driven search features.

Their content was ranking in the top 10, but it wasn’t being cited in Google AI Overviews, ChatGPT responses, or other generative search results: the places where modern buyers increasingly start their research.

RevvGrowth built an AI‑first editorial engine designed to increase AI visibility as well as traditional rankings. The goal was to create a scalable content system that met both semantic and structural criteria used by LLMs when generating answers.

Here’s how they executed it:

- Semantic Clustering and Topic Intelligence

Instead of publishing isolated pieces of content, the team mapped topics by intent and semantic relationships. This ensured each blog post fed into a larger topical ecosystem, helping LLMs understand how concepts were connected. Keywords were grouped into clusters that reflected how real users and AI agents think and query.

- Snippet‑Ready Formats and Structured Content

RevvGrowth optimized content for the direct-answer formats that generative systems prefer: definitions, bullet lists, numbered steps, and concise explanation blocks early in the article. For queries like “data governance committee” or “modern data stack,” this formatting made it easier for AI systems to extract and surface answers.

- AI Tools + Human Quality Control

Tools such as ChatGPT, Frase, and Perplexity were used to assist with outline generation, semantic optimization, and intent analysis. Human editors then refined this output for accuracy, context, and brand voice, raising E‑E‑A‑T signals in the process.

- Automated Workflows and Consistency

By integrating AI tools with editorial workflows and CMS systems, Atlan was able to scale production, publishing over 130 SEO‑optimized blogs per month, without diluting quality.

Impact and Results

Within a short period, Atlan saw measurable improvements in LLM visibility and business outcomes:

- Multiple featured snippets and AI Overview placements for high‑intent search terms.

- Significant increases in organic visibility across Google AI Overviews, ChatGPT, and Perplexity summaries.

- Content cited directly in large language model responses, a key signal of AI‑driven authority.

- Faster time to visibility thanks to structured, snippet‑aligned content rather than traditional keyword‑centric pages.

Atlan’s experience shows that winning in today’s search patterns requires more than high rankings. You need content that is intentionally structured for AI interpretation, semantically rich, and aligned with how LLMs extract answers.

While the strategies we've discussed can significantly boost your chances of ranking in AI-driven search results, it's important to be aware of common pitfalls that can hinder your progress. Let’s dive into some key mistakes to avoid when optimizing for LLMs.

Pitfalls to Avoid When Optimizing for LLMs

As you implement LLM optimization strategies, it's crucial to avoid some common mistakes that can undermine your efforts. Here are a few key mistakes to watch out for:

- Over-Relying on Keyword Stuffing Instead of Semantic Context

One of the biggest mistakes when optimizing for LLMs is focusing too much on keyword density. While keywords are still important, LLMs prioritize context and meaning over exact matches. Overstuffing your content with keywords can lead to poor readability and a lack of semantic depth, making it harder for AI to extract accurate information.

- Ignoring Citation and Factual Integrity

LLMs favor content that is accurate and backed by credible sources. Neglecting to cite trustworthy references or including unverifiable claims can result in your content being flagged as unreliable. To ensure high visibility in AI search results, always back up your statements with data from reputable sources and authoritative research.

- Not Testing How Your Content Appears in AI Tools

It's crucial to test how your content performs in actual AI tools like Google’s SGE, ChatGPT, or other generative systems. Without proper testing, you may miss key formatting issues, contextual misinterpretations, or potential improvements in how your content is displayed. Ensure your content is optimized for AI retrieval by regularly testing its visibility and effectiveness across these platforms.
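A lightweight way to make this testing repeatable is to save the answers each tool gives for your target queries and check them for your brand. The sketch below assumes the answers were copied by hand into a dictionary; it does not call any AI service, and the brand "ExampleCo", the domain "example.com", and the answer texts are all hypothetical.

```python
def citation_report(answers, brand, domain):
    """answers maps (tool, query) to the saved answer text.

    Returns one row per answer noting whether the brand or domain appears.
    """
    rows = []
    for (tool, query), text in sorted(answers.items()):
        lowered = text.lower()
        rows.append(
            {
                "tool": tool,
                "query": query,
                "mentions_brand": brand.lower() in lowered,
                "cites_domain": domain.lower() in lowered,
            }
        )
    return rows


def visibility_rate(rows):
    """Share of saved answers that mention the brand or cite the domain."""
    if not rows:
        return 0.0
    hits = sum(1 for row in rows if row["mentions_brand"] or row["cites_domain"])
    return hits / len(rows)


# Hypothetical answers, pasted in manually for illustration.
saved_answers = {
    ("ChatGPT", "what is a data catalog"): "ExampleCo describes a data catalog as an inventory of data assets.",
    ("Perplexity", "what is a data catalog"): "A data catalog is an inventory of data assets (source: example.com/blog).",
    ("Google AI Overviews", "what is a data catalog"): "A data catalog organizes metadata.",
}

rows = citation_report(saved_answers, brand="ExampleCo", domain="example.com")
for row in rows:
    print(row)
print(f"Visibility rate: {visibility_rate(rows):.0%}")
```

Re-running the same queries on a schedule and comparing the rate over time shows whether content changes are actually improving AI visibility.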

To avoid these common pitfalls and ensure your content is fully optimized for LLMs, it's important to take strategic steps toward building a robust, AI-friendly content engine. Let’s now look at the next steps for building an LLM-optimized content strategy that delivers long-term success.

Next Steps: How to Build an LLM-Optimized Content Engine

Building an LLM-optimized content engine enables you to harness the power of large language models (LLMs) to create highly effective, scalable content. Here’s how to set up an efficient content engine that enhances both quality and performance.

1. Define Your Content Strategy

To create an LLM-optimized content engine, start by clearly defining your content strategy. Establishing your objectives, understanding your audience, and choosing content formats will ensure your engine is focused and aligned with your goals.

How You Can Do It

- Identify Content Goals: Clearly define your content objectives, whether it’s driving traffic, improving SEO rankings, increasing conversions, or educating customers.

- Segment Your Audience: Develop detailed buyer personas to tailor content for each stage of the buyer's journey (TOFU, MOFU, BOFU).

- Choose Content Types: Based on your goals and audience segments, decide on content formats (blog posts, landing pages, FAQs, case studies, etc.).

Defining your content strategy ensures that every piece of content you produce is purposeful and aligned with your business objectives. A well-defined strategy sets a strong foundation for the effectiveness of your content engine.

2. Optimize for SEO with LLM Capabilities

SEO is essential for content visibility. Leveraging LLM capabilities can enhance your content’s SEO by automating research, optimizing structure, and ensuring comprehensive keyword targeting.

How You Can Do It

- Keyword Research Automation: Use LLM-powered tools to analyze top-ranking content, uncover high-performing long-tail keywords, and identify keyword gaps in your niche.

- Content Mapping: Use LLMs to create topic clusters around core themes, ensuring a comprehensive coverage of keywords and related terms.

- On-Page Optimization: Automatically generate SEO-friendly meta descriptions, title tags, and optimize content structure with relevant headings and internal links.

By optimizing for SEO with LLM capabilities, you ensure your content ranks higher, attracts more traffic, and is positioned to meet your audience’s needs. This step is vital in making your content discoverable and impactful.
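If you generate title tags and meta descriptions automatically, it helps to validate them before they go live. The sketch below is a simple guardrail: the 60- and 160-character budgets are common rules of thumb used here as assumptions rather than official limits, and `check_meta` is a hypothetical helper name.

```python
TITLE_MAX = 60         # assumed character budget for a title tag
DESCRIPTION_MAX = 160  # assumed character budget for a meta description


def check_meta(title, description):
    """Return a list of problems found in a generated title and description."""
    issues = []
    if not title.strip():
        issues.append("title is empty")
    elif len(title) > TITLE_MAX:
        issues.append(f"title is {len(title)} chars (limit {TITLE_MAX})")

    if not description.strip():
        issues.append("description is empty")
    elif len(description) > DESCRIPTION_MAX:
        issues.append(f"description is {len(description)} chars (limit {DESCRIPTION_MAX})")
    return issues


print(check_meta(
    "LLM Search Optimization Guide",
    "Learn how to structure content for AI-driven search.",
))  # []
```

A check like this fits naturally between the generation step and the CMS, so a human only reviews the drafts that fail.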

3. Integrate AI Content Generation into Your Workflow

Integrating AI content generation into your workflow can save significant time and resources. LLMs can handle content drafting, making the process more efficient, while humans focus on refinement and strategy.

How You Can Do It

- Content Brief Automation: Set up AI to create detailed content briefs that outline the target keyword, structure, tone, and key points for writers.

- AI-Assisted Drafts: Use LLMs to generate first drafts or sections of content, reducing manual writing time. Focus human efforts on editing, strategy, and creativity.

- Batch Content Generation: Implement LLM tools to produce large quantities of content at once, especially for repetitive content types like product descriptions, FAQs, or blog posts.

Integrating AI into your content workflow ensures faster production without compromising on quality. The combination of LLM-generated drafts and human input allows for scalability while keeping content fresh and relevant.

4. Focus on Quality with Human-LLM Collaboration

While LLMs can generate high volumes of content quickly, maintaining quality requires human oversight. By collaborating with LLMs, you can ensure content resonates with your audience and meets brand standards.

How You Can Do It

- Human Editing and Fact-Checking: Always have content reviewed and refined by human editors to ensure factual accuracy, tone consistency, and creativity.

- Personalization and Emotion: Use LLMs to generate personalized content, but ensure that human insights add emotional appeal and connection where necessary.

- A/B Testing with LLM Outputs: Experiment with multiple versions of the same content generated by LLMs to find which one resonates most with your audience. Use these results to refine future content.

The combination of LLM efficiency and human creativity ensures content is both high-quality and on-brand. Regular human oversight is key to making sure the content resonates with your target audience and drives meaningful engagement.

5. Measure, Analyze, and Refine Your Content Engine

To make sure your LLM-powered content engine is delivering value, you need to measure its performance and iterate. By analyzing metrics and continuously refining your strategy, you can stay ahead of trends and optimize your engine for better results.

How You Can Do It

- Track Performance Metrics: Monitor traffic, conversion rates, user engagement, and rankings to measure content effectiveness.

- AI-Powered Analytics: Use AI tools to perform deeper content performance analysis, identify high-performing content patterns, and suggest optimization opportunities.

- Iterate and Improve: Regularly analyze results and use insights to improve the content engine, whether through updated topics, LLM fine-tuning, or new keyword strategies.

Measuring and refining your content engine ensures it remains aligned with your business objectives and audience needs. With continuous optimization, your content strategy will stay competitive and effective.
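The core metrics are simple ratios, so they are easy to compute from an analytics export. The sketch below is a minimal illustration with made-up numbers: the page URLs and figures are hypothetical, and the field names (`impressions`, `clicks`, `conversions`) are assumptions about what your export contains.

```python
def summarize(pages):
    """Compute click-through and conversion rates, lowest click-through first."""
    summary = []
    for page in pages:
        impressions = page["impressions"]
        clicks = page["clicks"]
        conversions = page["conversions"]
        summary.append(
            {
                "url": page["url"],
                # Guard against division by zero for pages with no traffic.
                "ctr": clicks / impressions if impressions else 0.0,
                "conversion_rate": conversions / clicks if clicks else 0.0,
            }
        )
    return sorted(summary, key=lambda row: row["ctr"])


# Hypothetical analytics export for illustration.
pages = [
    {"url": "/llm-seo-guide", "impressions": 1000, "clicks": 50, "conversions": 5},
    {"url": "/schema-markup-basics", "impressions": 2000, "clicks": 20, "conversions": 1},
]

for row in summarize(pages):
    print(f"{row['url']}: CTR {row['ctr']:.1%}, conversion {row['conversion_rate']:.1%}")
```

Sorting by click-through rate puts pages that are seen but rarely clicked at the top of the list, which is the pattern to watch for when AI answers absorb the click.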

By following these five steps, you'll be well on your way to building an LLM-optimized content engine that scales content production, enhances quality, and ultimately drives more results. This structured approach empowers both AI and human collaboration to deliver high-impact content.

Conclusion: From Keywords to Concepts–The Future of SEO Is Here

SEO as we know it is evolving, and the shift toward AI-driven search is here to stay. It's no longer just about ranking for keywords; it's about creating content that resonates with intelligent systems like Google SGE, ChatGPT, and other LLMs that prioritize context, semantics, and clarity.

As more brands shift to AI-first strategies, they’re discovering how to optimize their content to not only rank in traditional search but also be featured in AI overviews, snippets, and chatbot responses.

The future of SEO isn’t just about keywords; it’s about content that speaks the language of both humans and AI. Those who adapt to this new paradigm will not only stay relevant but also set the stage for dominating future search experiences. Embracing LLM optimization today positions your brand to lead in the AI-powered world of tomorrow.

But this shift also opens up new opportunities. As AI-driven search continues to grow, it’s no longer about competing with hundreds of other brands for the same set of keywords; it’s about understanding and anticipating what AI is looking for and positioning your content to be the preferred answer.

The time to rethink your SEO strategy is now.

With LLM optimization, you can future-proof your content, ensuring your brand stands out in an AI-dominant search landscape. This is your chance to lead the charge.

Ready to optimize your content for AI-driven search?

Reach out to Revv Growth today to build an AI-first content strategy that drives results and positions your brand for success in the next era of SEO.
