Introduction

According to a recent survey by SurveyMonkey, 69% of marketers are excited about the potential of AI, and 60% are optimistic about the impact it will have on their jobs. On the surface, these numbers suggest that AI is the future of marketing, no questions asked.

Spend some time on Reddit, Quora, or other community conversations, and you’ll find a different narrative. Marketers actually using AI aren’t exactly thrilled. In fact, they’re raising serious concerns that don’t always make it to the headlines.

Take, for example, marketers frustrated that AI can’t maintain the consistency and logical flow that marketing content needs. Others say AI-generated content feels too generic and not personal enough to resonate with real people. These aren’t isolated complaints. They’re challenges faced by many marketers trying to make AI work in the real world.

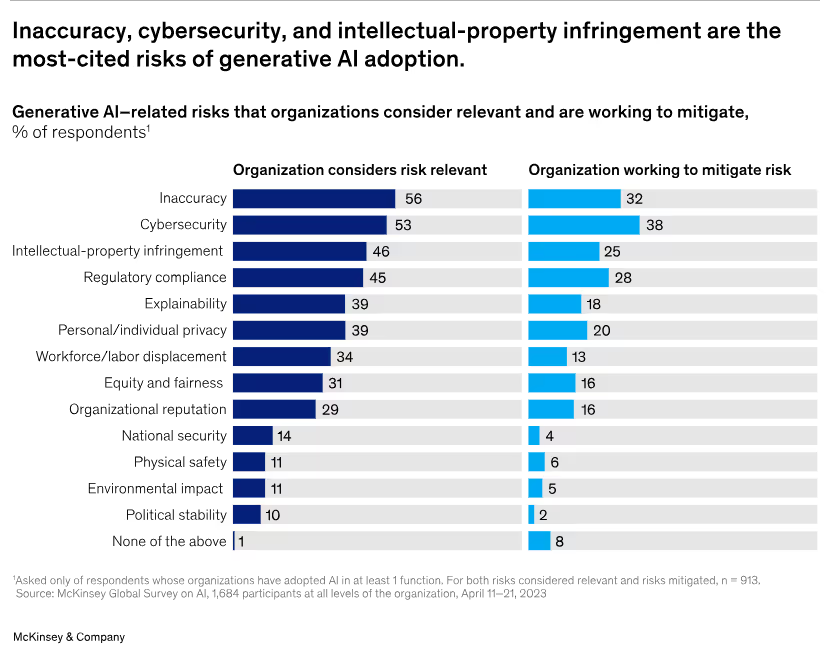

Content creation is just one part of the bigger picture. Marketers who are pushing AI deeper into their processes are raising alarms about issues like biased algorithms, transparency, and data privacy. These concerns are often swept under the rug in mainstream discussions about AI’s benefits, but they’re just as important.

In this post, we’ll take a closer look at these issues. We’ll cover the disadvantages marketers are facing, the limitations of AI in marketing, and the risks involved. Most importantly, we’ll discuss how to address these challenges as AI continues to evolve in the marketing world.

What Are the Disadvantages of AI in Marketing?

As more marketers embrace AI, they’re finding that its limitations and risks are becoming harder to ignore. Whether it’s the lack of creativity, the reliance on data that may not be accurate, or concerns over privacy and ethics, AI in marketing comes with its fair share of challenges.

Lack of Real-World Understanding and Context

AI might sound smart, but it has no grounding in the real world. It can’t truly grasp emotional tone, cultural nuance, or live human feedback. As real users keep pointing out, that gap between sounding knowledgeable and actually understanding can cause real problems, especially in marketing, customer support, and content creation, where tone and context matter as much as information.

For example, in customer service, an AI chatbot might handle routine queries efficiently. But when a customer is frustrated, the chatbot can’t sense that frustration or adapt its response. Instead, it might give a generic, robotic answer that annoys the customer even further.

This isn’t just a theoretical concern. In a Reddit thread discussing the limits of AI, several users pointed out that tools like ChatGPT don’t actually know anything. They just stitch together responses based on patterns in language.

One commenter put it simply: the AI doesn’t experience the world. It hasn’t touched water or seen a glass fall. It just knows that words like 'glass,' 'spill,' and 'wet' often appear together. That’s not understanding; it’s pattern recognition.

In the same thread, multiple users also criticized people who use AI in serious contexts, such as analyzing government contracts, without understanding its limits. The results, they said, were useless at best and dangerous at worst.

Relies on Human Input: Needs Continuous Prompting & Adjustment

AI, especially in marketing, is only as effective as the data and context it’s given. It doesn’t think on its own; it needs constant direction and input from humans. Unlike humans, AI doesn’t learn from or adapt to its environment in real time. It can only act on the instructions and data it receives.

The Struggle with Repetitive Adjustments

It’s not surprising that many marketers are venting their frustration online. As one user bluntly put it, “It’s funny how using AI seems to make my job worse.” The conversation is full of marketers calling out a shared pain point: AI doesn’t run on autopilot. It needs constant prompting, tweaking, and oversight.

One user shared, “My job has turned into constantly tweaking the AI’s tone, feeding it my materials, and editing the final output.” Another pointed out that AI was supposed to take over the monotonous work. Instead, it’s trying to handle the creative parts and failing at them.

“The ‘time-saving’ promise becomes a cycle of writing prompts, fact-checking, and re-editing,” says yet another user.

Whether it’s scripting, campaign copy, or content ideation, marketers have to constantly feed AI with updated instructions and context. Without it, the output lacks relevance, accuracy, or emotional nuance.

One user even coined the term “prompting fatigue,” and another jokingly asked whether he had come up with it himself after being worn out from constantly prompting the AI. The term stuck because it captures exactly how many marketers feel.

These real-world accounts reflect a simple truth: AI can’t think for itself. It can’t adapt to emerging trends, shifting buyer behavior, or cultural context unless a human tells it to. That’s especially risky in areas like customer segmentation or content personalization, where real-time context matters.

How We Optimized GPT Prompts for Content Creation at RevvGrowth

At RevvGrowth, we encountered the same challenges that many marketers face when experimenting with AI and prompting. Initially, the process felt like more of a hindrance than a help. AI could generate content, but the output often lacked the depth, nuance, and precision we needed. It was clear that relying on generic prompts alone wasn’t enough.

Instead of getting discouraged, we decided to make AI work for us. We took an iterative approach and turned the problem into an opportunity to refine our process. Over time, we developed a system where AI played a crucial role in every step of our content creation, but we didn’t automate blindly. We carefully tailored each part of the process to meet our specific needs.

We started by drafting prompts that we felt would guide the AI. Then, we turned the AI loose to improve on those drafts. Through this continuous feedback loop, we refined our prompts until the output was detailed and highly relevant.

Now, we use custom prompts for each stage of blog content creation, from SEO and keyword audits to blog editing. These prompts have become an integral part of our workflow, helping us create high-quality content not just for ourselves but also for our clients. What started as a challenge became a strength: today, our content creation process is largely automated yet still personalized to meet each client’s unique needs.

Ethical Concerns: An Ongoing Debate

AI is increasingly integrated into marketing and sales workflows, with 14% of teams already using it daily, yet over 90% of companies are concerned about its ethical implications. Issues like bias, transparency, and fairness are becoming central to the conversation, and the debate is moving quickly as AI plays a bigger role in business.

Bias & Discrimination in AI Models

At its core, AI doesn’t think independently; it learns from what it’s fed. That means any biases in its training data become part of its logic.

A 2023 study on gender bias in large language models found that LLMs are 3 to 6 times more likely to associate certain jobs with gender stereotypes, like linking "nurse" to women and "engineer" to men.

This isn’t just a quirk. It’s a serious issue, especially in marketing where language, representation, and tone shape brand perception. When left unchecked, AI can reinforce outdated views, exclude audiences, and reflect discrimination that should have been left behind.

The Growing Debate About AI Bias in Marketing

One Reddit thread debating the ethical concerns around AI illustrates just how significant this issue is. Users discussed how OpenAI’s model, despite being positioned as "ethical," still harbors deep-rooted biases. Of course, some users defend these models.

Some argue that AI’s flaws are not a result of malice, but rather part of the natural evolution of technology. They claim that AI is merely learning from the data available to it, and improvements are on the horizon. While this viewpoint acknowledges the imperfections, it fails to address the fact that these biases are still present and can influence real-world applications like marketing.

Data Privacy: The Risks to Business & Consumer Data

A 2024 study, “Ethical AI in Retail: Consumer Privacy and Fairness,” reveals that consumers are more worried than ever about how AI handles their personal data and are demanding transparency and stronger safeguards.

One user admitted to treating AI tools as non-judgmental, even confiding personal details, while forgetting that these models don’t exist in a vacuum. That kind of misplaced trust raises red flags for businesses deploying AI without strict data governance.

Others worry about what companies might do with the prompts and responses logged by AI systems, especially in customer interactions, surveys, or internal decision-making.

For businesses, this means AI isn’t a plug-and-play solution. It requires clear privacy policies, secure infrastructure, and consumer trust. Otherwise, the legal and reputational risks could outweigh the benefits.

Mitigating the Risks: Best Practices for Responsible AI in Marketing

AI in marketing holds immense potential, but to fully unlock its benefits without exposing businesses or consumers to risks, responsibility is key. Here are actionable steps marketers can take to mitigate the ethical, privacy, and bias-related risks when using AI.

Protecting Sensitive and Confidential Information

The first and most critical step in responsible AI use is safeguarding sensitive data. AI systems process vast amounts of personal and business data, making confidentiality a top concern. To ensure this data doesn’t fall into the wrong hands, implement strong data encryption, access controls, and anonymization techniques.

Regularly audit your AI tools to ensure compliance with data protection laws like GDPR and CCPA. Use secure cloud storage, and avoid sharing sensitive data with third-party services unless absolutely necessary. For example, a marketing firm could remove or pseudonymize personal identifiers such as names and email addresses before feeding customer data into an AI system for segmentation, so the tool never sees the raw personal data.

- Implement data encryption to secure sensitive information both in transit and at rest.

- Establish strict access controls to ensure only authorized personnel can access confidential data.

- Regularly audit AI systems for compliance with data protection regulations like GDPR and CCPA.

- Use secure cloud storage and avoid sharing data with third-party services unless necessary.
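To make the segmentation example concrete, here is a minimal Python sketch of stripping identifiers from customer records before they reach an AI tool. It assumes records arrive as dictionaries and that `name`, `email`, and `phone` are the direct identifiers; the field names, the `prepare_for_ai` helper, and the hard-coded key are hypothetical placeholders. It swaps the email for a keyed HMAC-SHA256 hash, so the same customer always maps to the same ID and AI output can be joined back internally, while outsiders can’t recompute the ID from an email without the key.

```python
import hashlib
import hmac

# Hypothetical placeholder key: in practice, load it from a secrets manager, never hard-code it.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"

# Assumed direct identifiers that should never be sent to a third-party AI tool.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a keyed SHA-256 hash of a normalized identifier.

    The same input always yields the same ID, but without the key an outsider
    can't recompute it from a known email address.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()[:16]


def prepare_for_ai(record: dict) -> dict:
    """Drop direct identifiers and add a pseudonymous customer_id (assumes an 'email' field)."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["customer_id"] = pseudonymize(record["email"])
    return cleaned


customer = {"name": "Jane Doe", "email": "jane@example.com", "phone": "555-0100",
            "plan": "pro", "last_purchase_days": 12}
print(prepare_for_ai(customer))  # only plan, last_purchase_days, and customer_id remain
```

Keep in mind that under GDPR, pseudonymized data is generally still considered personal data, so a step like this reduces exposure rather than removing your compliance obligations.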

Avoid Feeding AI Biased or Incomplete Data

AI models are only as good as the data they are trained on. If fed with biased or incomplete data, AI can generate inaccurate or discriminatory results. This is a serious risk, especially when AI is used for customer segmentation or content personalization.

Regularly review and diversify the data used to train AI models. Audit historical data for embedded biases before relying on it, and supplement it with data that reflects current trends and demographics.

For instance, when training an AI system for customer targeting, include diverse customer profiles across different ethnicities, genders, and socioeconomic backgrounds to avoid reinforcing stereotypes.

- Diversify training data to include a broad range of customer profiles across various demographics.

- Review historical data for inherent biases and adjust models accordingly.

- Incorporate real-time data to reflect current trends and consumer behaviors.

- Ensure ongoing data updates to prevent outdated information from skewing AI outputs.
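One simple way to act on the review step is to compare how often each group appears in your data against a reference mix you trust, such as your actual customer base. The sketch below assumes records are dictionaries; the `representation_gaps` function, the `age_band` attribute, the reference shares, and the 0.5 tolerance are illustrative choices, not an established standard. It flags any group whose share falls below half of what you’d expect.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.5):
    """Flag groups whose share of the data is below `tolerance` times their reference share.

    reference_shares: hypothetical target mix, e.g. {"18-34": 0.40, ...}.
    Groups that appear in the data but not in reference_shares are ignored.
    """
    counts = Counter(r.get(attribute, "unknown") for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        actual = counts.get(group, 0) / total if total else 0.0
        if actual < tolerance * expected:
            gaps[group] = {"actual": round(actual, 3), "expected": expected}
    return gaps


# Illustrative data: the 35-54 band is missing entirely from this training sample.
sample = [{"age_band": "18-34"}] * 8 + [{"age_band": "55+"}] * 2
target = {"18-34": 0.40, "35-54": 0.35, "55+": 0.25}
print(representation_gaps(sample, "age_band", target))
# prints: {'35-54': {'actual': 0.0, 'expected': 0.35}}
```

A flagged group is a cue for human review, not an automatic fix; a gap can also reflect a genuine difference between your audience and the reference mix.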

Continuous Monitoring and Adjustment of AI Systems

Without continuous monitoring, AI models can drift, making predictions or decisions based on outdated or irrelevant data. This can lead to ineffective marketing campaigns or even ethical violations.

Set up a feedback loop to monitor the AI system’s performance and results. Regularly update the system based on new data, changing market conditions, or consumer feedback.

For example, at RevvGrowth, we use GPT for keyword analysis, but we don't fully automate the process. Instead, we have a detailed step-by-step approach to ensure that the keywords and SEO outlines generated by GPT align with our SEO strategies and our clients' specific objectives.

- Set up a feedback loop to track the performance and output of AI models regularly.

- Monitor AI predictions to ensure they align with the business’s objectives and customer expectations.

- Adjust AI models based on market changes, customer feedback, and new data.

- Track AI-generated content for accuracy, relevance, and potential issues like bias or inconsistency.
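For the feedback loop, one common way to spot drift is the Population Stability Index (PSI), which compares how a metric is distributed now against a baseline: PSI = Σ (actual% - expected%) × ln(actual% / expected%). The sketch below is a minimal, hypothetical implementation. The bins could be anything you track, such as the share of leads the model assigns to each segment, and the 0.1 / 0.25 thresholds are a widely used rule of thumb rather than a fixed standard.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions.

    Both lists must use the same bins in the same order and be non-empty.
    """
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # eps avoids log(0) when a bin is empty
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score


def drift_status(score):
    """Map a PSI score to an action using a common (not universal) rule of thumb."""
    if score < 0.1:
        return "stable"
    if score <= 0.25:
        return "watch"
    return "investigate"


# Hypothetical example: leads per segment when the model was set up vs. this month.
baseline = [250, 250, 250, 250]
this_month = [100, 200, 300, 400]
score = psi(baseline, this_month)
print(f"PSI = {score:.3f} -> {drift_status(score)}")  # prints: PSI = 0.228 -> watch
```

A "watch" or "investigate" result is where the human review described above comes in: check whether the market actually shifted or whether the model’s inputs went stale.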

Feeding Complete and Contextual Data

AI performs best when it receives complete, accurate, and contextually rich data. This includes not just raw numbers but also the context behind those numbers, such as customer intent, behavior, and preferences.

Provide AI systems with well-rounded, contextual data. For example, when using AI for customer segmentation, make sure the model receives information about past purchasing behavior, not just demographics.

This helps AI deliver more relevant and actionable insights. For instance, a campaign using AI to create personalized email content should consider not only a customer’s age but also their recent interactions with your brand to increase engagement.

- Provide comprehensive customer data that includes behaviors, preferences, and interaction history.

- Ensure that AI receives rich, contextual data beyond demographics, such as purchasing intent and previous engagement.

- Feed data into AI systems that mirror real-world scenarios for accurate decision-making.

- Regularly update datasets to ensure AI has access to the most up-to-date and relevant information.
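To show what contextual data beyond demographics can look like in practice, here is a minimal sketch that turns a customer’s profile and recent interactions into a short context block for an AI personalization prompt. The `CustomerContext` and `Interaction` types, the event names, and the 30-day lookback are hypothetical assumptions; adapt them to whatever your CRM actually records.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Interaction:
    day: date
    kind: str    # hypothetical event names, e.g. "email_open", "page_view", "purchase"
    detail: str


@dataclass
class CustomerContext:
    age_band: str
    preferences: list[str]
    interactions: list[Interaction] = field(default_factory=list)


def build_context(c: CustomerContext, today: date,
                  lookback_days: int = 30, max_events: int = 5) -> str:
    """Combine demographics with recent behavior into plain text for an AI prompt."""
    # Keep only interactions inside the lookback window, newest first, capped at max_events.
    recent = sorted(
        (i for i in c.interactions if (today - i.day).days <= lookback_days),
        key=lambda i: i.day,
        reverse=True,
    )[:max_events]
    lines = [f"Age band: {c.age_band}",
             f"Stated preferences: {', '.join(c.preferences) or 'none'}"]
    if recent:
        lines.append("Recent interactions (newest first):")
        lines += [f"- {i.day.isoformat()} {i.kind}: {i.detail}" for i in recent]
    else:
        lines.append(f"No interactions in the last {lookback_days} days.")
    return "\n".join(lines)


ctx = CustomerContext(
    "25-34",
    ["running", "trail shoes"],
    [Interaction(date(2024, 5, 20), "page_view", "trail shoe comparison guide"),
     Interaction(date(2024, 3, 1), "purchase", "road running socks")],  # outside the 30-day window
)
print(build_context(ctx, today=date(2024, 5, 25)))
```

Filtering by recency and capping the number of events keeps the context focused and current, which covers both the "rich, contextual data" and "up-to-date datasets" points in the list.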

Conclusion

The buzz around AI in marketing is loud, but the real story is more complicated. For marketers on the ground, AI isn’t just a shiny new tool. It’s a system that still requires constant prompting, careful oversight, and ethical scrutiny.

The takeaway isn’t that AI should be avoided. It’s that it must be used responsibly, intentionally, and with a deep understanding of its limitations. Hype shouldn't replace judgment.

If AI is to serve marketing rather than derail it, marketers must stay in the driver’s seat, steering with clarity, not coasting on automation.

If you're facing roadblocks with AI, we can help. Book a free strategy call with our team to audit your AI workflows, identify hidden risks, and build a responsible, effective AI marketing plan.
